What if we treated the cloud as just another data center?

I work daily on Amazon's cloud infrastructure (AWS for short), and I spend a lot of time thinking about the best way to implement new technical solutions on this platform.

Amazon, and the cloud in general, have brought an impressive amount of freedom:

- Deploying on tailor-made infrastructure

- Making infrastructure elastic

- Getting machines on demand

However, these new approaches to infrastructure have also brought their share of negatives.

Previously, deploying an application in a data center meant preparing and properly sizing its hosting before any deployment could start. That sizing was tied to the purchase of suitable hardware, or at least to a resource reservation that had to be amortized over several years.

Cloud computing has brought a new paradigm: if I want a machine, I click a button, and within a few minutes it is ready to run. That is what has contributed so much to the success of cloud adoption: deploying is quick and easy.

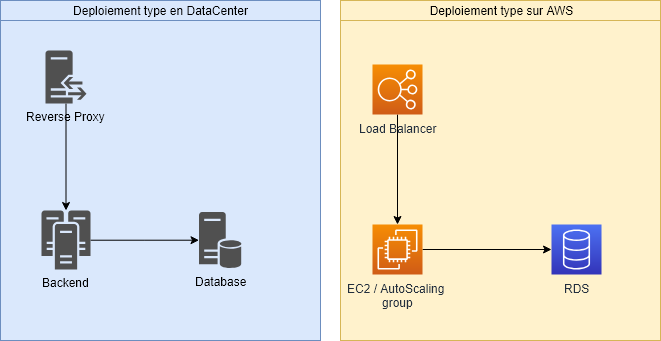

Yet we see similar patterns being replicated whether we're in a data center or on AWS. Each time, we find the same application layers with the same functionality; the load balancer / backend / database model, for example, looks very much the same in both.

I think this ease has made us lazy.

A few days ago, I found myself debating the sizing of a machine that an application required: 64 GB of RAM and 8 vCPUs, for an application that provides an interface for a few users and, after all, doesn't do much. When I said that I thought the requirements were oversized for what was expected, the answer I got was "we're in the cloud, we can have these resources, why deprive ourselves of them".

Indeed, one can easily get a machine that matches this sizing (it is the machine I specified in the architecture).

Still, one may wonder when we started to think that optimizing our code was optional, on the pretext that the cloud can provide whatever resources we need.

I think the fact that we don't see the price of the machine directly contributes to this: the impact seems to be nil, and the price is basically just a piece of information.
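One way to make that price visible is a quick back-of-the-envelope calculation. The sketch below is only an illustration: both hourly rates are hypothetical placeholders, not real AWS prices, and the `monthly_cost` helper is a name I made up for the example.

```python
HOURS_PER_MONTH = 730  # average number of hours in a month (8760 / 12)


def monthly_cost(hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """Return the cost of running one machine for `hours` at `hourly_rate`."""
    return hourly_rate * hours


# Hypothetical hourly rates, chosen only for illustration (NOT real AWS prices).
oversized_rate = 0.40   # stands in for the 8 vCPU / 64 GB machine
rightsized_rate = 0.05  # stands in for a small machine serving a few users

oversized = monthly_cost(oversized_rate)
rightsized = monthly_cost(rightsized_rate)

print(f"Oversized:   {oversized:.2f} per month")
print(f"Right-sized: {rightsized:.2f} per month")
print(f"Difference:  {(oversized - rightsized) * 12:.2f} per year")
```

Plugging in the real rates of the instance types under discussion turns "it is just information" into a number that can be weighed against the cost of optimizing the code.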

What if we started thinking differently about the cloud, and considered that, in the end, it is only servers hosted elsewhere, which we pay for just like those in the data center, only at a lower cost? Would we tolerate this laxity in the creation of new applications just as easily?

Solutions exist.

The serverless model proves it: complex and highly demanding applications can be approached differently.

The current logic is no longer to see the application as an indivisible whole, but rather to divide it into several unitary components. This is more commonly known as the microservice model: each service has a single role and is one part of a whole, which is the application itself.
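As a rough sketch of what "a single role" can look like, here is a tiny service whose only job is to price an order. The `handler(event, context)` signature follows the usual AWS Lambda convention for Python; the order format, the `compute_total` helper, and the assumption that the event carries a JSON string under `"body"` are all hypothetical and only there for illustration.

```python
import json


def compute_total(items: list[dict]) -> float:
    """The single responsibility of this service: compute an order total."""
    return sum(item["unit_price"] * item["quantity"] for item in items)


def handler(event: dict, context: object) -> dict:
    """Entry point written in the style of an AWS Lambda Python handler.

    Assumes (hypothetically) that the event carries a JSON string under "body".
    """
    order = json.loads(event["body"])
    total = compute_total(order["items"])
    return {"statusCode": 200, "body": json.dumps({"total": total})}


if __name__ == "__main__":
    # Local call with a hand-built event; no AWS account is needed.
    fake_event = {"body": json.dumps({"items": [{"unit_price": 5, "quantity": 3}]})}
    print(handler(fake_event, None))
```

Because the business logic lives in a plain function, the same code can sit behind a container, a web framework, or a managed function without being rewritten.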

This model decouples the application, and it also makes it possible to:

- Create standard entry points, which simplifies interfaces with other applications.

- Parallelize certain actions that have no direct dependencies, and thus improve overall performance.

- Switch fairly easily to a micro-container model or to managed services like AWS Lambda, and from there migrate to a serverless model.
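The point about parallelizing independent actions can be sketched in a few lines. This is a minimal example, assuming two hypothetical services (`fetch_profile` and `fetch_orders`) whose network calls are simulated with `asyncio.sleep`; the names and the returned data are made up.

```python
import asyncio
import time


async def fetch_profile(user_id: int) -> dict:
    """Hypothetical call to a profile service (network latency is simulated)."""
    await asyncio.sleep(0.2)
    return {"user_id": user_id, "name": "example"}


async def fetch_orders(user_id: int) -> list:
    """Hypothetical call to an order service (network latency is simulated)."""
    await asyncio.sleep(0.2)
    return [{"order_id": 1}]


async def build_dashboard(user_id: int) -> dict:
    # The two calls do not depend on each other, so they can run concurrently.
    profile, orders = await asyncio.gather(
        fetch_profile(user_id),
        fetch_orders(user_id),
    )
    return {"profile": profile, "orders": orders}


if __name__ == "__main__":
    start = time.perf_counter()
    result = asyncio.run(build_dashboard(42))
    elapsed = time.perf_counter() - start
    print(result)
    print(f"elapsed: {elapsed:.2f}s (two sequential calls would take about 0.4s)")
```

With a monolith, these two steps would often run one after the other; once they are separate services with no dependency between them, waiting for both at the same time costs roughly the latency of the slower one.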

These changes always require work, and therefore investment, but over time the return can be quite high.

What if we considered the cloud to be just another data center, and started choosing more wisely how we implement our applications?
