Speed up the testing of your Lambda functions with Docker

Lambda is a very powerful AWS tool. Running code in serverless mode drastically reduces the cost and complexity of managing a scalable infrastructure. However, testing functions directly on Lambda can be frustrating, because it requires round trips between the development workstation and the AWS environment.

The AWS Toolkit provides testing features for the most popular editors (Microsoft Visual Studio Code and PyCharm, for example). However, this limits your choice of editor and creates a dependency that is not particularly desirable.

Today, I will show how to test your Lambda functions simply with Docker.

In this post, my explanations are based on a Python 3 runtime, since Python is a language I know well. To do the same in the language of your choice, refer to the documentation of the Docker image.

Lambci/lambda: an image to test them all!

lambci/lambda is an image available on Docker Hub that simulates a Lambda runtime environment.

This image will allow us to emulate a lambda function locally, for testing purposes.

It can also run as a web server, to recreate a Lambda function behind an ALB or an API Gateway.

Preparing my working environment

This step is purely optional. It simply consists of creating a directory tree from which I can later build my Lambda package as a .zip file.

Create a directory for my test

As a general good practice in Python development, I create a directory containing my script, any layers, and my requirements.txt file, which lists the third-party libraries I need for my test. I created the following tree structure:

.
├── lambda
│   ├── main.py
│   └── requirements.txt
└── layers

The lambda directory holds my Lambda script and its requirements; the layers directory will hold my layers, which I will cover later.
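Since this tree is meant to end up as a .zip package, here is a minimal sketch of how the packaging step could look in Python. The function name `build_package` and the `package.zip` file name are my own choices for illustration. The sketch only zips the files already present in the directory, so third-party libraries would have to be installed into it first for a real deployment.

```python
import tempfile
import zipfile
from pathlib import Path


def build_package(source_dir, zip_path):
    """Zip every file under source_dir, with paths relative to source_dir.

    zip_path should live outside source_dir, otherwise the archive
    would try to include itself.
    """
    source = Path(source_dir)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(source.rglob("*")):
            if path.is_file():
                archive.write(path, path.relative_to(source).as_posix())
    return Path(zip_path)


if __name__ == "__main__":
    # Demo on a throwaway copy of the tree, so nothing real is touched.
    with tempfile.TemporaryDirectory() as tmp:
        demo = Path(tmp) / "lambda"
        demo.mkdir()
        (demo / "main.py").write_text("def lambda_handler(event, context):\n    return 0\n")
        (demo / "requirements.txt").write_text("requests\n")
        package = build_package(demo, Path(tmp) / "package.zip")
        with zipfile.ZipFile(package) as archive:
            print(archive.namelist())
```

Keeping the archive paths relative to the source directory puts main.py at the root of the .zip, which is where Lambda looks for a handler named main.lambda_handler.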

Creating a first lambda, with an external library

I will create a simple Lambda function that uses the Python requests library to make an HTTP call to my site. This library is not included by default in the Lambda runtime, so I have to install it myself.

#!/usr/bin/env python3

import requests

def lambda_handler(event, context):
    r = requests.get('https://tferdinand.net')
    print('Return code : {}'.format(r.status_code))
    if r.status_code == 200:
        return 0
    else:
        return 1

The code is very simplistic, but that's not what this post is about.

Running my python code in a lambda context

Now that my function is ready (and what a function!), it's time to test it.

First, I fill my lambda/requirements.txt file with a single line, "requests", since this module is not available in Lambda by default.

Create a new image with my libraries and code

To do this, I will create a new "Dockerfile" file at the root of my development directory.

.
├── Dockerfile
├── lambda
│   ├── main.py
│   └── requirements.txt
└── layers

In this Dockerfile, I will:

- Start from the lambci/lambda image with the python3.8 tag

- Copy my code into the image

- Install my python requirements

FROM lambci/lambda:python3.8
COPY ./lambda/ .
USER root
RUN pip install -r requirements.txt

Once I have created my Dockerfile, I can launch the creation of my docker image.

docker build . -t lambda_test_tferdinand.net:v1.0

Then, I just have to execute my lambda function by launching the container with the name of my lambda handler, in my case: main.lambda_handler.

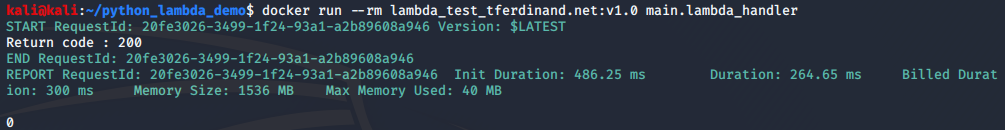

docker run --rm lambda_test_tferdinand.net:v1.0 main.lambda_handler

As you can see, the output contains the same information as a regular Lambda execution, including memory consumption and execution time.

You can also see the output of the "print" from my script, as well as the return value of the Lambda function.

Mount the code directly into the container

If only my code changes, and not my dependencies, I can save time on later tests by building a Docker image that contains only the dependencies, and simply mounting the directory containing my code.

The Dockerfile

FROM lambci/lambda:python3.8
COPY ./lambda/requirements.txt .
USER root
RUN pip install -r requirements.txt

I then build the image in the same way:

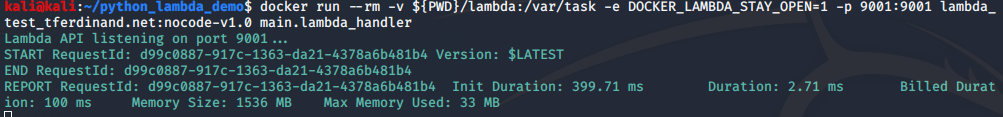

docker build . -t lambda_test_tferdinand.net:nocode-v1.0

Then all I have to do is mount my code at run time:

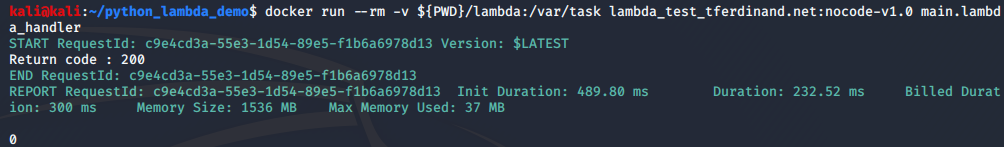

docker run --rm -v ${PWD}/lambda:/var/task lambda_test_tferdinand.net:nocode-v1.0 main.lambda_handler

As you can see, the result is exactly the same.

Both methods are viable; I leave it up to you to judge which one best suits your needs.
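If you run these commands often, they can be wrapped in a small Python helper. This is only a sketch: the names `build_run_command` and `run_lambda` are hypothetical, and the helper does nothing more than assemble the same "docker run" arguments used in this post, optionally mounting the code directory on /var/task.

```python
import subprocess
from pathlib import Path


def build_run_command(image, handler, code_dir=None):
    """Assemble the docker run command for one Lambda invocation.

    When code_dir is given, it is mounted on /var/task (the "mount" method);
    otherwise the code is expected to be baked into the image.
    """
    command = ["docker", "run", "--rm"]
    if code_dir is not None:
        command += ["-v", f"{Path(code_dir).resolve()}:/var/task"]
    command += [image, handler]
    return command


def run_lambda(command):
    """Run the container and return the completed process (requires Docker)."""
    return subprocess.run(command, capture_output=True, text=True, check=False)
```

For example, `build_run_command("lambda_test_tferdinand.net:nocode-v1.0", "main.lambda_handler", code_dir="lambda")` produces the equivalent of the mounted command above, and passing the result to `run_lambda` executes it.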

Add a layer

Lambda supports layers, which let several functions share code or libraries that are loaded at run time.

The Docker image also lets you test with layers if needed.

I'm going to modify my little script so that it uses a function that I will place in a layer, then test it in the same way.

First, I'll create my layer in ./layers/http_caller/main.py

#!/usr/bin/env python3

import requests

def call(url):
    r = requests.get(url)
    print('Hello from my layer!')
    print('Return code : {}'.format(r.status_code))
    if r.status_code == 200:
        return 0
    else:
        return 1

Nothing extraordinary; it is still simple demonstration code.

Then, I modify my code in lambda/main.py to call the function from this layer:

#!/usr/bin/env python3

import sys
sys.path.append('/opt')

from http_caller.main import call

def lambda_handler(event, context):
    return call('https://tferdinand.net')

Adding the "/opt" directory to the Python path is required because AWS Lambda extracts layers into this directory.
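To make the import mechanism concrete, here is a small sketch that reproduces it outside of Lambda. A temporary directory stands in for /opt, and the layer is replaced by a stub `call` function that makes no HTTP request; the helper names `make_fake_layer` and `import_from_layer` are hypothetical.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def make_fake_layer(root):
    """Create root/http_caller/main.py containing a stub call() function."""
    package = Path(root) / "http_caller"
    package.mkdir(parents=True)
    (package / "main.py").write_text(
        "def call(url):\n"
        "    return 'called ' + url\n"
    )
    return package


def import_from_layer(root, module_name):
    """Append root to sys.path (as the post does with /opt), then import."""
    root = str(root)
    if root not in sys.path:
        sys.path.append(root)
    importlib.invalidate_caches()
    return importlib.import_module(module_name)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as fake_opt:
        make_fake_layer(fake_opt)
        module = import_from_layer(fake_opt, "http_caller.main")
        print(module.call("https://tferdinand.net"))
```

Once the parent directory is on sys.path, Python resolves http_caller.main like any other package, which is exactly what happens with /opt inside the container.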

At this point, I have the following tree structure:

.
├── Dockerfile
├── lambda
│   ├── main.py
│   └── requirements.txt
└── layers
    └── http_caller
        └── main.py

Finally, I can execute my code:

- Either by recreating a new Dockerfile

FROM lambci/lambda:python3.8
COPY ./lambda/ .
COPY ./layers/ /opt/
USER root
RUN pip install -r requirements.txt

- Or by executing it directly:

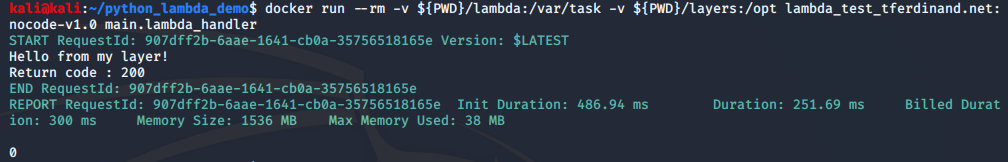

docker run --rm -v ${PWD}/lambda:/var/task -v ${PWD}/layers:/opt lambda_test_tferdinand.net:nocode-v1.0 main.lambda_handler

As you can see, my code executed correctly and loaded my layer.

Run an API and get the context

It is also possible to emulate a web server to reproduce the behavior of an API Gateway or an ALB in front of my Lambda function.

To perform this test, I will modify my code so that it returns the event it received:

#!/usr/bin/env python3

def lambda_handler(event, context):
    return {
        "statusCode": 200,
        "body": event
    }
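Returning the event as-is is fine for this local test. Behind a real API Gateway proxy integration, however, the body is expected to be a string. The variant below is a sketch of my own, not something this test requires: it serializes the event with json.dumps and adds a Content-Type header, which is an assumption about what the client expects.

```python
import json


def lambda_handler(event, context):
    """Echo the received event back, serialized as a JSON string."""
    return {
        "statusCode": 200,
        # Assumption: the caller expects a JSON response body.
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(event),
    }
```

Calling `lambda_handler({"user-agent": "curl"}, None)` directly in a Python shell is a quick way to check the shape of the response before starting the container.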

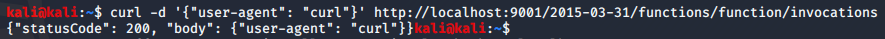

Then I invoke my Lambda function once more, adding two parameters:

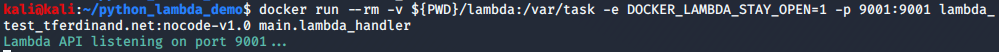

- I set the environment variable "DOCKER_LAMBDA_STAY_OPEN" to 1 so that the container keeps running in API mode; emulating the server is one of the features built into the image.

- I publish the port of this API and map it to my host.

docker run --rm -v ${PWD}/lambda:/var/task -e DOCKER_LAMBDA_STAY_OPEN=1 -p 9001:9001 lambda_test_tferdinand.net:nocode-v1.0 main.lambda_handler

As you can see, my Lambda function is ready to be invoked.

Note that it must be called as described in the official documentation of the Lambda "Invoke" API:

curl -d '{"user-agent": "curl"}' http://localhost:9001/2015-03-31/functions/function/invocations

Note that I sent a payload, passed with "-d", to simulate a Lambda event.

You can see that my Lambda function ran correctly and that my payload is clearly visible in the response.

On the container side, you can see the execution time and memory consumption, as usual.
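The same call can be made from Python with the standard library only. This is a sketch under a few assumptions: the container is listening on localhost:9001 as in the command above, the function name in the URL is "function" as in the curl call, and the response is valid JSON. The names `build_invoke_request` and `invoke` are hypothetical.

```python
import json
import urllib.request


def build_invoke_request(payload, host="localhost", port=9001, function_name="function"):
    """Build the POST request for the Invoke endpoint exposed by the container."""
    url = f"http://{host}:{port}/2015-03-31/functions/{function_name}/invocations"
    data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(url, data=data, method="POST")


def invoke(payload, timeout=10):
    """Send the event to the running container and decode the JSON response.

    Requires the container to be started with DOCKER_LAMBDA_STAY_OPEN=1.
    """
    request = build_invoke_request(payload)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the container running, `invoke({"user-agent": "curl"})` sends the same payload as the curl command.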

In conclusion

The lambci/lambda docker image allows you to quickly and efficiently test your lambda functions.

It lets you quickly validate executions during development, and it can also be fully integrated into a deployment pipeline for integration testing.
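As an illustration of the pipeline use case, here is a sketch of a check that could run after "docker run". It assumes that the container writes the handler's return value as JSON on the last non-empty line of its standard output; verify this against the image documentation before relying on it. The names `parse_lambda_result` and `check_success` are hypothetical.

```python
import json


def parse_lambda_result(stdout):
    """Decode the handler's result from the last non-empty line of stdout.

    Assumption: the container prints the return value as JSON on that line.
    """
    lines = [line for line in stdout.splitlines() if line.strip()]
    if not lines:
        raise ValueError("no output captured from the container")
    return json.loads(lines[-1])


def check_success(stdout, expected=0):
    """Return True when the handler's result equals the expected value."""
    return parse_lambda_result(stdout) == expected
```

With the first function of this post, which returns 0 on success and 1 on failure, a pipeline step could fail the build whenever `check_success` returns False.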

I have covered only a small part of what this image can do, and I invite you to visit its page on Docker Hub if you want more information!

Feel free to react in the comments to give your opinion on this image.
